Neural net processors, also called neural processing units (NPUs), are specialized processors optimized for AI and machine learning workloads. For those workloads they offer higher performance, better energy efficiency, and real-time processing, which makes them important in AI, edge computing, healthcare, and gaming.

This article explores what it means when your CPU is paired with a neural net processor (in most current devices, the NPU is a separate block on the same chip as the CPU), covering its capabilities, applications, and benefits.

Understanding Neural Net Processors:

What is a Neural Net Processor?

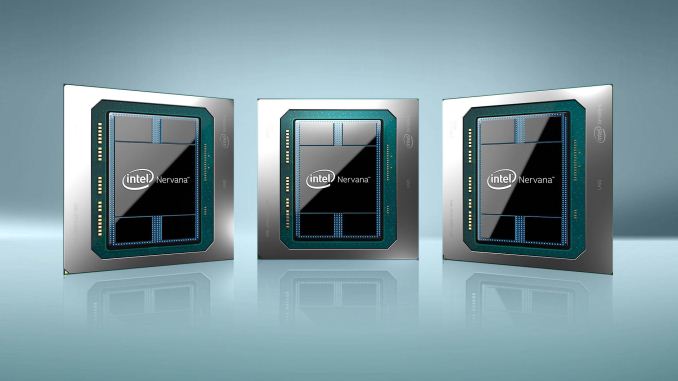

A neural net processor, often referred to as a neural processing unit (NPU), is a specialized type of processor designed to accelerate the performance of neural network computations.

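Unlike conventional CPUs, which are built to handle a wide range of tasks, NPUs are optimized for a narrow set of AI operations, chiefly the matrix multiplications and convolutions at the heart of deep learning. Most NPUs in phones and laptops target inference (running an already trained model), while large data-center accelerators also handle training. They are built for the massive parallelism these workloads require, since a single inference can involve millions to billions of multiply-accumulate operations on large arrays of numbers.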

How Does a Neural Net Processor Work?

Neural net processors work by executing the many independent computations in a neural network in parallel. Traditional CPUs can struggle with these workloads because their general-purpose design devotes most of its resources to a few powerful cores optimized for fast sequential execution and complex control flow.

NPUs, by contrast, contain large arrays of simple arithmetic units, typically multiply-accumulate (MAC) units, that operate concurrently, which delivers significant performance improvements for AI workloads. For instance, in an image recognition task, an NPU can process many parts of the image at the same time, producing results faster and with less energy. This parallelism is crucial for tasks like real-time video processing, natural language processing, and autonomous driving, where speed and efficiency are paramount.
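To make the parallelism concrete, here is a minimal Python sketch (an illustration, not how any particular NPU is programmed) of a single fully connected layer, y = Wx + b. Each output value is an independent chain of multiply-accumulate (MAC) operations, so the outputs can be computed in any order or all at once. The function names are hypothetical, and the thread pool only demonstrates that the outputs are independent; Python threads will not actually speed up this arithmetic.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def neuron(row: List[float], bias: float, x: List[float]) -> float:
    """One output value: a chain of multiply-accumulate (MAC) operations."""
    acc = bias
    for w_i, x_i in zip(row, x):
        acc += w_i * x_i  # one MAC
    return acc


def dense_layer(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Sequential version: outputs computed one after another, as a single CPU core would."""
    return [neuron(row, b, x) for row, b in zip(weights, bias)]


def dense_layer_parallel(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Same result, with each output submitted as an independent task.

    This only shows that the outputs do not depend on each other; an NPU
    exploits that independence in hardware with many MAC units.
    """
    with ThreadPoolExecutor() as pool:
        return list(pool.map(neuron, weights, bias, [x] * len(weights)))


def count_macs(n_inputs: int, n_outputs: int) -> int:
    """MAC operations needed by one dense layer (ignoring the bias adds)."""
    return n_inputs * n_outputs


x = [1.0, 2.0, 3.0]
W = [[0.5, -1.0, 2.0],
     [1.0, 0.0, -1.0]]
b = [0.5, -1.0]

print(dense_layer(x, W, b))           # [5.0, -3.0]
print(dense_layer_parallel(x, W, b))  # [5.0, -3.0]
print(count_macs(1024, 1024))         # 1048576
```

Even a modest 1024-by-1024 layer needs over a million MACs, and a network stacks many such layers, which is why hardware with thousands of MAC units running side by side pays off.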

Applications of Neural Net Processors:

Artificial Intelligence and Machine Learning:

The most prominent application of neural net processors is in AI and ML. These processors are used to accelerate the training and inference phases of neural networks, enabling faster development and deployment of AI models.

Companies such as Google and Apple have built dedicated neural processors into their platforms, and NVIDIA adds similar matrix-math units (Tensor Cores) to its GPUs. For example, Google's Tensor Processing Unit (TPU) is designed specifically to accelerate AI workloads in its data centers, and Apple's Neural Engine, part of its A-series and M-series chips, speeds up on-device AI features such as Face ID and augmented reality.

Edge Computing:

Neural net processors are also critical in edge computing, where processing is performed locally on devices rather than in centralized data centers. This is particularly important for Internet of Things (IoT) devices, autonomous vehicles, and smart cameras, which require real-time data processing and decision-making capabilities.
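One way to see why local processing matters is a simple latency budget. The sketch below assumes a smart camera running at 30 frames per second, which leaves about 33 ms per frame; the inference and network round-trip times are hypothetical figures chosen for illustration, not measurements.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame at a given frame rate."""
    return 1000.0 / fps


def total_latency_ms(inference_ms: float, network_rtt_ms: float = 0.0) -> float:
    """End-to-end latency: on-device inference has no network round trip."""
    return inference_ms + network_rtt_ms


def meets_deadline(latency_ms: float, fps: float) -> bool:
    """True if the frame is processed before the next one arrives."""
    return latency_ms <= frame_budget_ms(fps)


FPS = 30
# Hypothetical numbers: a local NPU that needs 15 ms per frame...
on_device = total_latency_ms(inference_ms=15.0)
# ...versus a faster cloud accelerator (10 ms) behind a 60 ms network round trip.
cloud = total_latency_ms(inference_ms=10.0, network_rtt_ms=60.0)

print(f"budget: {frame_budget_ms(FPS):.1f} ms")                          # budget: 33.3 ms
print(f"on-device: {on_device} ms, ok={meets_deadline(on_device, FPS)}")  # on-device: 15.0 ms, ok=True
print(f"cloud: {cloud} ms, ok={meets_deadline(cloud, FPS)}")              # cloud: 70.0 ms, ok=False
```

Even though the cloud accelerator computes faster in this example, the network round trip alone exceeds the frame budget, which is the core argument for running inference at the edge.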


Healthcare and Biomedical Applications:

In healthcare, NPUs and other AI accelerators speed up tasks such as medical image analysis, drug discovery, and genomics. By processing complex data more efficiently, they help researchers develop personalized treatment plans and advance medical research.

Gaming and Graphics:

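In the gaming industry, neural processors contribute to better-looking graphics and smarter in-game AI. AI units such as the Tensor Cores in NVIDIA GPUs run upscaling and denoising models that make real-time ray tracing practical at high frame rates, and neural networks can drive more lifelike non-player-character behavior. The rendering, ray tracing, and physics simulation themselves are still handled mainly by the GPU and CPU.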

Benefits of Neural Net Processors:

Enhanced Performance:

One of the primary benefits of neural net processors is their enhanced performance for AI and ML tasks. Offloading these tasks from the CPU to the NPU lets them run faster and more efficiently while freeing the CPU for other work, which improves application performance and user experience.
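In practice, offloading is usually handled by a runtime that checks which operations of a model the NPU supports, runs those on the NPU, and falls back to the CPU for the rest (TensorFlow Lite's delegates work along these lines). The sketch below shows only the partitioning idea in plain Python; the operation names and the supported-op set are hypothetical.

```python
from itertools import groupby
from typing import List, Set, Tuple

# Hypothetical: operations this NPU's driver can execute.
NPU_SUPPORTED_OPS: Set[str] = {"conv2d", "relu", "matmul", "add", "avg_pool"}


def partition(ops: List[str], supported: Set[str]) -> List[Tuple[str, List[str]]]:
    """Split a linear sequence of ops into consecutive runs on the same device."""
    def device(op: str) -> str:
        return "npu" if op in supported else "cpu"

    return [(dev, list(group)) for dev, group in groupby(ops, key=device)]


model_ops = ["conv2d", "relu", "conv2d", "custom_nms", "matmul", "add", "softmax"]
segments = partition(model_ops, NPU_SUPPORTED_OPS)
for dev, ops in segments:
    print(dev, ops)
# npu ['conv2d', 'relu', 'conv2d']
# cpu ['custom_nms']
# npu ['matmul', 'add']
# cpu ['softmax']

# Each switch between devices implies a data hand-off, so fewer segments is better.
print("device switches:", len(segments) - 1)  # device switches: 3
```

The design point this illustrates: a single unsupported operation in the middle of a model splits the work and forces data back and forth between NPU and CPU, so operator coverage matters as much as raw NPU speed.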

Energy Efficiency:

Neural net processors are designed to be energy efficient, which is crucial for mobile and embedded devices. By optimizing power consumption while maintaining high performance, NPUs extend battery life and reduce heat generation, making them ideal for smartphones, tablets, and other portable devices.
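Much of this efficiency comes from doing arithmetic with low-precision integers: many NPUs are designed around 8-bit integer (int8) math, which needs less memory traffic and simpler circuits than 32-bit floating point. Below is a minimal sketch of symmetric int8 quantization, assuming a single scale factor for the whole tensor; real toolchains offer more refined schemes.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using a single scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() picks the nearest integer (ties go to the even neighbour in Python).
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Approximately recover the original floats."""
    return [qi * scale for qi in q]


weights = [0.5, -1.27, 0.0, 1.27]
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 0, 127]

restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(max_error <= scale / 2)  # True

# Storage: 1 byte per int8 value instead of 4 bytes per float32 value.
print(len(weights) * 4, "bytes ->", len(q) * 1, "bytes")  # 16 bytes -> 4 bytes
```

The trade-off is a small rounding error (at most half a quantization step per value here) in exchange for a 4x reduction in storage and cheaper integer arithmetic.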

Real-time Processing:

The ability to perform real-time processing is another significant advantage of NPUs. This is essential for applications such as autonomous driving, where split-second decisions are necessary for safety and functionality. NPUs provide the computational power required to analyze sensor data and reach decisions within milliseconds.
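A quick calculation shows why milliseconds matter. Assuming a car travelling at 100 km/h, the distance it covers while the perception system is still computing grows linearly with latency; the latency values below are illustrative, not measurements of any specific system.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Metres travelled while waiting latency_ms milliseconds at speed_kmh."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # km/h -> m/s
    return speed_m_per_s * latency_ms / 1000  # ms -> s


for latency in (10, 50, 100, 300):
    d = distance_during_latency_m(100, latency)
    print(f"{latency:>4} ms -> {d:.2f} m")
#   10 ms -> 0.28 m
#   50 ms -> 1.39 m
#  100 ms -> 2.78 m
#  300 ms -> 8.33 m
```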

Scalability:

Neural net processors also scale: designs range from small NPUs in phones to large data-center accelerators, and bigger models can be handled by splitting their computations into smaller pieces or spreading them across more processing units and chips. This scalability helps hardware keep pace with the growing size of AI models and datasets.
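One technique that lets fixed-size hardware handle ever larger models is tiling: a large matrix multiplication is broken into small blocks that each fit the accelerator's compute array and on-chip memory, and the blocks are processed in turn or spread across more units. The sketch below is a simplified pure-Python illustration of blocked matrix multiplication; the tile size of 2 is arbitrary.

```python
from typing import List

Matrix = List[List[int]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Reference matrix multiplication."""
    return [[sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def matmul_tiled(a: Matrix, b: Matrix, tile: int = 2) -> Matrix:
    """Blocked matrix multiplication: work on tile x tile blocks at a time.

    Each (i0, j0, k0) step touches only small blocks of a, b and c, which is
    what lets a fixed-size compute array process matrices of any size.
    """
    n, k, m = len(a), len(b), len(b[0])
    c = [[0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k, tile):
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = c[i][j]
                        for p in range(k0, min(k0 + tile, k)):
                            acc += a[i][p] * b[p][j]  # accumulate this block's partial sum
                        c[i][j] = acc
    return c


A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
B = [[9, 8, 7], [6, 5, 4], [3, 2, 1]]
print(matmul_tiled(A, B))                  # [[30, 24, 18], [84, 69, 54], [138, 114, 90]]
print(matmul_tiled(A, B) == matmul(A, B))  # True
```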

Optimizing Your System with Neural Net Processors:

Choosing the Right Hardware:

When selecting a system with a neural net processor, consider the specific requirements of your applications. For AI and ML tasks, look for NPUs with high computational throughput (commonly quoted in TOPS, trillions of operations per second) and support for popular frameworks like TensorFlow and PyTorch. For edge computing, prioritize energy efficiency and real-time processing capabilities.
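Vendor throughput figures are easiest to interpret with a back-of-the-envelope estimate. NPU throughput is commonly quoted in TOPS (trillions of operations per second), usually counting one multiply-accumulate as two operations and usually at peak, low-precision rates. The sketch below estimates per-inference time from a model's MAC count; the model size, TOPS ratings, and 30% utilization factor are hypothetical assumptions, since sustained utilization varies widely by model and software stack.

```python
def estimated_latency_ms(macs: float, tops: float, utilization: float = 0.3) -> float:
    """Rough inference time for a model needing `macs` multiply-accumulates.

    Assumes 1 MAC = 2 operations (a multiply and an add) and that only a
    fraction `utilization` of the peak TOPS rating is achieved in practice.
    """
    ops = 2 * macs
    effective_ops_per_s = tops * 1e12 * utilization
    return ops / effective_ops_per_s * 1000  # seconds -> milliseconds


# Hypothetical model needing 5 billion MACs per inference.
for tops in (10, 40):
    print(f"{tops} TOPS -> {estimated_latency_ms(5e9, tops):.2f} ms")
# 10 TOPS -> 3.33 ms
# 40 TOPS -> 0.83 ms
```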

Integrating with Existing Systems:

Integrating NPUs with existing systems involves ensuring compatibility with other components, such as memory, storage, and network interfaces. It’s important to choose hardware and software that work seamlessly together to maximize performance and efficiency.
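Memory is often the first compatibility check. A model's weights alone need roughly (number of parameters) × (bytes per parameter), so the numeric formats the NPU supports directly affect whether a model fits. The sketch below ignores activations and runtime overhead, and the 7-billion-parameter model and 8 GB memory figure are hypothetical examples.

```python
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits(num_params: float, dtype: str, available_gb: float) -> bool:
    """True if the weights alone fit in the available memory."""
    return weight_memory_gb(num_params, dtype) <= available_gb


params = 7e9  # hypothetical 7-billion-parameter model
for dtype in ("fp32", "fp16", "int8", "int4"):
    print(dtype, weight_memory_gb(params, dtype), "GB, fits in 8 GB:", fits(params, dtype, 8.0))
# fp32 28.0 GB, fits in 8 GB: False
# fp16 14.0 GB, fits in 8 GB: False
# int8 7.0 GB, fits in 8 GB: True
# int4 3.5 GB, fits in 8 GB: True
```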

Software Optimization:

Optimizing software to take full advantage of neural net processors involves using AI frameworks and libraries that support hardware acceleration. This includes utilizing APIs and development tools provided by NPU manufacturers to streamline the integration and optimization process.
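As one concrete pattern, ONNX Runtime exposes hardware back ends as "execution providers": `onnxruntime.get_available_providers()` lists the ones present in the installed build, and a preference-ordered list can be passed as the `providers` argument of `onnxruntime.InferenceSession`. The helper below sketches only the selection logic in plain Python, so it runs without ONNX Runtime installed; the preference order is an assumption you would adapt to your hardware.

```python
from typing import List, Sequence

# Assumed preference order: NPU-capable providers first, CPU as the final fallback.
PREFERRED_PROVIDERS = [
    "QNNExecutionProvider",       # Qualcomm NPUs via QNN
    "CoreMLExecutionProvider",    # Apple Core ML, which may use the Neural Engine
    "OpenVINOExecutionProvider",  # Intel hardware via OpenVINO
    "CPUExecutionProvider",
]


def choose_providers(available: Sequence[str],
                     preferred: Sequence[str] = PREFERRED_PROVIDERS) -> List[str]:
    """Return the preferred providers that are available, always ending with the CPU."""
    chosen = [p for p in preferred if p in available and p != "CPUExecutionProvider"]
    chosen.append("CPUExecutionProvider")
    return chosen


# Example inputs; with ONNX Runtime installed these would come from
# onnxruntime.get_available_providers().
print(choose_providers(["CoreMLExecutionProvider", "CPUExecutionProvider"]))
# ['CoreMLExecutionProvider', 'CPUExecutionProvider']
print(choose_providers(["CPUExecutionProvider"]))
# ['CPUExecutionProvider']
```

Keeping the CPU provider last means the model still runs, only more slowly, on machines without a supported NPU.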

Future of Neural Net Processors:

The future of neural net processors looks promising, with ongoing advancements in AI and ML driving innovation. As AI applications become more pervasive, the demand for powerful and efficient NPUs will continue to grow. Future developments may include even greater integration with CPUs and GPUs, improved energy efficiency, and enhanced support for a broader range of AI models and applications.

FAQs

1. What is a neural net processor?

A neural net processor, or NPU, is a specialized processor designed to accelerate neural network computations, such as deep learning inference and training.

2. How does a neural net processor work?

Neural net processors leverage parallel processing capabilities to handle the simultaneous computations involved in neural network operations, providing significant performance improvements over traditional CPUs.

3. What are the primary applications of neural net processors?

NPUs are primarily used in artificial intelligence and machine learning, edge computing, healthcare, and gaming to accelerate tasks, enhance real-time processing, and improve overall system performance.

4. What are the benefits of using a neural net processor?

The main benefits of using NPUs include enhanced performance for AI and ML tasks, energy efficiency, real-time processing capabilities, and scalability to handle increasingly complex AI models and larger datasets.

5. How can I optimize my system with a neural net processor?

To optimize your system with an NPU, choose the right hardware for your specific applications, ensure compatibility with existing system components, and use AI frameworks and libraries that support hardware acceleration.

Conclusion

Neural net processors represent a significant step forward in computing technology, offering substantial performance and efficiency gains for AI and ML applications. By understanding the capabilities and benefits of NPUs, you can make informed decisions about integrating them into your systems, whether for personal use, business applications, or cutting-edge research. As the technology continues to evolve, neural net processors are likely to play a crucial role in shaping the future of computing.